Since 1996, the law known unpoetically as Section 230 has governed the liability of millions of websites, blogs, apps, social media platforms, search engines, and online services that host content created by their users. Hundreds of court decisions have created a now-venerable jurisprudence around the statute. But not until this year has the U.S. Supreme Court had an opportunity to consider its first Section 230 cases.

When the court’s 2022-23 term ended in June, policymakers who had anxiously awaited the justices’ pronouncement on the 27-year-old law finally got their answer: Section 230 would be left untouched. As the statute’s co-authors in the 1990s, we are quick to acknowledge that no law is perfect, this one included. But the legal certainty the court’s decisions provide could not come at a more critical time, as artificial intelligence and applications such as ChatGPT raise new questions about who is liable for defamatory or otherwise illegal content on the internet.

The clarity provided by Section 230 will be central to answering those questions for investors in AI and for consumers of all kinds. The law plainly states that it does not protect anyone who creates or develops content, even in part, and generative AI applications such as ChatGPT, by definition, create content.

The two cases the Supreme Court decided this year, Gonzalez v. Google and Twitter v. Taamneh, involved claims that the internet giants’ platforms aided and abetted terrorism by unknowingly hosting ISIS videos. By a vote of 9-0, the Court answered, “No.”

The justices pointed out the negative consequences of shifting liability from actual wrongdoers to providers of services generally available to the public. YouTube and Twitter “transmit information by billions of people, most of whom use the platforms for interactions that once took place via mail, on the phone, or in public areas,” they noted. That alone “is insufficient to state a claim … [A] contrary holding would effectively hold any sort of communication provider liable for any sort of wrongdoing.”

Both platforms have strict policies against terrorism-related content but failed to stop the material at issue. Even so, said the Court, while “bad actors like ISIS are able to use platforms like defendants’ for illegal–and sometimes terrible–ends … the same could be said of cell phones, email, or the internet generally.”

While Google and Twitter were undoubtedly pleased with this result, they do not have Section 230 to thank. That law was not the reason they escaped liability. Instead, the plaintiffs failed to win on their underlying claims that the platforms caused the harm they suffered.

That very fact demonstrates something about Section 230 that has long been evident. It can be a convenient scapegoat. It has been blamed for platforms’ decisions to moderate too much content, and simultaneously for their failure to moderate enough. However, the leeway that platforms have to make those decisions is not conferred by Section 230. It is the First Amendment that gives them the right to decide how to moderate content on their sites.

With Gonzalez and Taamneh in the rear-view mirror, attention will soon shift to two cases the Supreme Court is likely to take up in its next term. Florida and Texas have both enacted laws giving their attorneys general sweeping power to oversee content moderation decisions by social media platforms. Both laws have been enjoined following lawsuits in the lower federal courts, while petitions for Supreme Court review are pending.

In the meantime, Congress continues to consider legislative nips and tucks to Section 230. It’s important to remember that Congress enacted Section 230 with overwhelming bipartisan support, after lengthy consideration of the many competing interests at stake. Today’s debate is marked by radically differing proposals that would all but cancel each other out. Some proceed from the premise that platforms aren’t sufficiently aggressive in monitoring content; others are based on concerns that platforms already moderate too much. All of them would put the government in charge of deciding what a platform should and should not publish, an approach that in itself raises new problems.

At the same time, we acknowledge that many technology companies face justified criticism for doing too little to keep illegal content off their platforms, and for failing to provide transparency around controversial moderation decisions. Their use and abuse of Americans’ private data is a looming concern. These are all areas where Congress should act decisively.

One thing is certain: Refining Section 230 and the legal regime that has governed the internet during its first three decades will soon seem far less challenging than the truly novel questions surrounding the rapid adoption of artificial intelligence across the internet of the future.

In that sense, 2023 is very much like 1995, when we stood on the shore of the great uncharted ocean that was the World Wide Web. Today, as then, legislators are grappling with complex new issues for which reflexive political answers of the past will not suffice. A happy side effect is that the process of learning together may yield bipartisan results, just as it did in the 1990s when Section 230 was written.

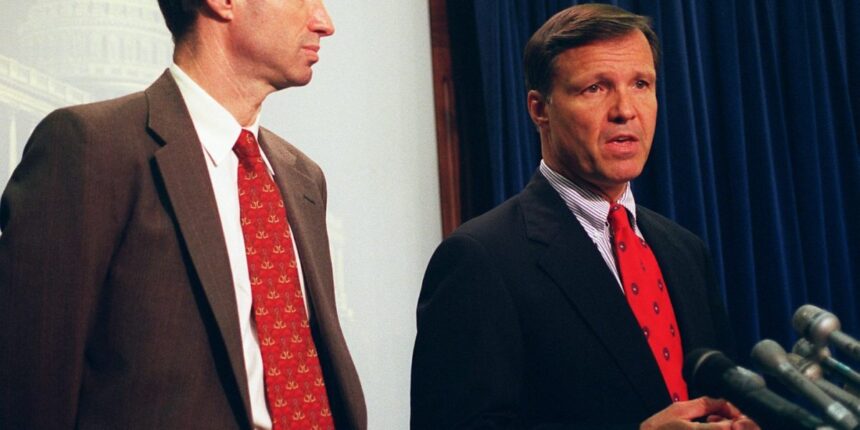

Ron Wyden is a U.S. senator from Oregon, chair of the Senate Finance Committee, and senior member of the Senate Select Committee on Intelligence.

Former U.S. Representative Christopher Cox is an attorney, a director of several for-profit and nonprofit organizations including NetChoice, and author of a forthcoming biography of Woodrow Wilson (Simon & Schuster, 2024).

The opinions expressed in Fortune.com commentary pieces are solely the views of their authors and do not necessarily reflect the opinions and beliefs of Fortune.