Generative AI has already changed the world, but not all that glitters is gold. While consumer interest in the likes of ChatGPT is high, there’s a growing concern among both experts and consumers about the dangers of AI to society. Worries around job loss, data security, misinformation, and discrimination are some of the main areas causing alarm.

AI is the fastest-growing fear in the US, up 26% from only a quarter ago.

AI will no doubt change the way we work, but companies need to be aware of the issues that come with it. In this blog, we’ll explore consumer worries around job and data security, and how brands can alleviate those concerns and protect both themselves and consumers from potential risks.

1. Safeguarding generative AI

Generative AI tools, like ChatGPT and image creator DALL-E, are quickly becoming part of daily life, with over half of consumers seeing AI-generated content at least weekly. As these tools require massive amounts of data to learn and generate responses, sensitive information can sneak into the mix.

Generative AI platforms have many moving parts, and many let users contribute code in various ways in the hope of improving processes and performance. The downside is that with so many contributors, vulnerabilities often go unnoticed, and personal information can be exposed. This is exactly what happened to ChatGPT in early May 2023.

With over half of consumers saying data breaches would cause them to boycott a brand, data privacy needs to be prioritized. While steps are being taken to write laws on AI, in the meantime, brands need to self-impose transparency rules and usage guidelines, and make these known to the public.

2 in 3 consumers want companies that create AI tools to be transparent about how they’re being developed.

Doing so can build brand trust, an especially coveted currency right now. Apart from quality, data security is the most important factor when it comes to trusting brands. With brand loyalty increasingly fragile, brands need to reassure consumers their data is in safe hands.

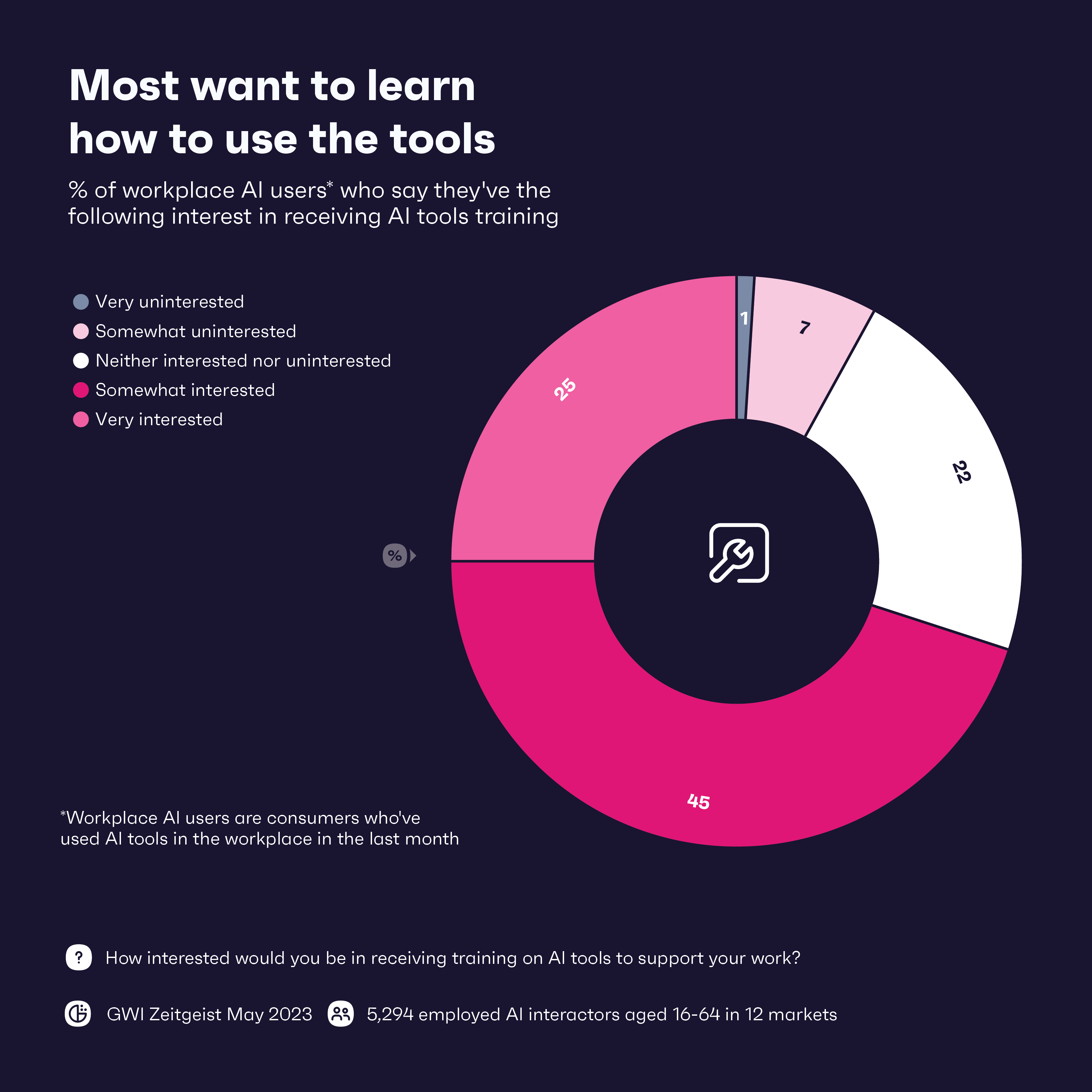

So what’s one of the best ways to go about securing data in the age of AI? The first line of defense is training staff in AI tools, with 71% of workers saying they’d be interested in training. Combining this with data-protection training is equally important. Education is really key here – arming workers with the knowledge needed to ensure data privacy is front-of-mind will go a long way.

2. Keeping it real in a fake news world

Facebook took 4.5 years to reach 100 million users. By comparison, ChatGPT took just over two months to reach that milestone.

As impressive as generative AI’s rise is, it’s been a magnet for fake news creation in the form of fabricated audio and video, known as deepfakes. The tech has already been used to spread misinformation worldwide. Only 29% of consumers are confident in their ability to tell AI-generated content and “real” content apart, which will likely get worse as deepfakes get more sophisticated.

Nearly two-thirds of ChatGPT users say they interact with the tool like they would a real person, which shows how potentially persuasive the tool could be.

But consumers have seen this coming; 64% say they’re concerned that AI tools can be used for unethical purposes. With this concern, and low confidence in detecting deepfakes, it’s brands that can make a difference in protecting consumers from this latest wave of fake news and providing education on how to identify such content.

Brands can start by implementing source verification and conducting due diligence on any information they want to share or promote. In the same vein, they can partner with fact-checkers or use in-house fact-checking processes on any news stories they may receive. For most brands, these measures will likely already be in place, as fake news and misinformation have been rampant for years.

But as deepfakes get smarter, brands will need to stay on top of them. To beat them, brands may need to turn to AI once more, in the form of AI-based detection tools that can identify and flag AI-generated content. These tools will become a necessity in the age of AI, but they may not be enough, as bad actors are usually a step ahead. Still, a combination of detection tools and human oversight to correctly interpret context and credibility could thwart the worst of it.
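For teams that want to picture how detection tools and human oversight might fit together, here is a minimal Python sketch. Everything in it is an assumption made for illustration: the `ContentItem` fields, the `triage` function, and both thresholds are hypothetical, and the detector score stands in for whatever a real detection tool would return.

```python
from dataclasses import dataclass

# Hypothetical thresholds; real values would need tuning on labeled examples.
HOLD_THRESHOLD = 0.9    # at or above: hold the item and escalate
REVIEW_THRESHOLD = 0.5  # at or above: a person checks context and credibility


@dataclass
class ContentItem:
    item_id: str
    detector_score: float  # assumed 0.0-1.0 "likely AI-generated" score
    source_verified: bool  # result of the brand's own source verification


def triage(item: ContentItem) -> str:
    """Route an item to 'hold', 'human_review', or 'publish'."""
    if not 0.0 <= item.detector_score <= 1.0:
        raise ValueError("detector_score must be between 0.0 and 1.0")
    if item.detector_score >= HOLD_THRESHOLD:
        return "hold"
    # Unverified sources go to a person even when the detector is unconcerned,
    # since detection tools alone may miss newer deepfakes.
    if item.detector_score >= REVIEW_THRESHOLD or not item.source_verified:
        return "human_review"
    return "publish"
```

The point of the design is that the tool never has the final say on borderline or unverified content; it only decides how quickly a human needs to look.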

Transparency is also key. Letting consumers know you’re doing something to tackle AI-generated fake news can score trust points with them, and help to set industry standards that can help everyone stay in step against deepfakes.

3. Combatting inherent biases

No one wants a PR nightmare, but that’s a real possibility if brands aren’t double- and triple-checking the information they get from their AI tools. Remember, AI tools learn from data scraped from the internet – data that is full of human biases, errors, and discrimination.

To avoid this, brands should be using diverse and representative datasets to train AI models. While completely eliminating bias and discrimination is near impossible, using a wide range of datasets can help weed some of it out. More consumers are concerned with how AI tools are being developed than not, and some transparency on the topic could make them trust these tools a bit more.
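One simple way to start reviewing how representative a dataset is would be to check each group's share of the records. The Python sketch below is only an illustration under stated assumptions: the function name, the `min_share` cutoff, and the idea of a single group label per record are all hypothetical, and a low share is a prompt for closer review rather than proof of bias.

```python
from collections import Counter


def underrepresented_groups(labels, expected_groups=(), min_share=0.10):
    """Return {group: share} for groups whose share of records is below min_share.

    labels: one group label per record (an assumed, simplified data layout).
    expected_groups: groups that should appear; missing ones are reported as 0.0.
    min_share: hypothetical cutoff, to be set per dataset and use case.
    """
    if not labels:
        raise ValueError("labels must not be empty")
    counts = Counter(labels)
    total = len(labels)
    groups = set(counts) | set(expected_groups)
    shares = {group: counts.get(group, 0) / total for group in groups}
    return {group: share for group, share in shares.items() if share < min_share}
```

For example, calling `underrepresented_groups(labels, expected_groups=["a", "b", "c"], min_share=0.2)` would surface any of those three groups that make up less than a fifth of the records, including one that is missing entirely.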

While all brands should care about using unbiased data, certain industries have to be more careful than others. Banking and healthcare brands in particular need to be hyper-aware of how they’re using AI, as these industries have a history of systemic discrimination. According to our data, behavior that causes harm to specific communities is the top reason consumers would boycott a brand, and within the terabytes of data used to train AI tools lies potentially harmful data.

In addition to a detailed review of datasets being used, brands also need humans, preferably ones with diversity, equity, and inclusion (DE&I) training, to oversee the whole process. According to our GWI USA Plus dataset, DE&I is important to 70% of Americans, and they’re more likely to buy from brands that share their values.

4. Striking the right balance with automation in the workplace

Let’s address the elephant in the room. Will AI be a friend or foe to workers? There’s no doubt it’ll change work as we know it, but how big AI’s impact on the workplace will be depends on who you ask.

What we do know is that a large majority of workers expect AI to have some sort of impact on their job. Automation of large aspects of employee roles is expected, especially in the tech and manufacturing/logistics industries. On the whole, workers seem excited about AI, with 8 of 12 sectors saying automation will have a positive impact.

On the flip side, nearly 25% of workers see AI as a threat to jobs, and those who work in the travel and health & beauty industries are particularly nervous. Generative AI seems to be improving rapidly every month, so the question is: If AI can take care of mundane tasks now, what comes next?

Even if AI does take some 80 million jobs globally, workers can find ways to use AI effectively to enhance their own skills, even in vulnerable industries. Customer service is set to undergo major upgrades with AI, but it can’t happen without humans. Generative AI can deal with most inquiries, but humans need to be there to handle sensitive information and provide an empathetic touch. Humans can also work with AI to provide more personalized solutions and recommendations, which is especially important in the travel and beauty industries.

AI automating some tasks can free up workers to contribute in other ways. They can dedicate extra time to strategic thinking and coming up with innovative solutions, possibly resulting in new products and services. It will be different for every company and industry, but those who are able to strike the right balance between AI and human workers should thrive in the age of AI.

The final prompt: What you need to know

AI can be powerful, but brands need to be aware of the risks. They’ll need to protect consumer data and be aware of fake news. Transparency will be key. Consumers are nervous about the future of AI, and brands showing them that they’re behaving ethically and responsibly will go a long way.

The tech is exciting, and will likely have a positive impact on the workplace overall. But brands should proceed with caution, and try to strike the right balance between tech and human capital. Employees will need extensive training on ethics, security, and the right application of AI, and that training will also elevate their skills. By integrating AI tools to work alongside people, as opposed to outright replacing them, brands can strike a balance that will set them up for the AI-enhanced future.