When NVIDIA released the GeForce 256, billed as the world's first GPU, in 1999, its cofounder and CEO Jensen Huang could hardly have foreseen what two transformational waves of AI, first deep learning and then generative AI (genAI), would help achieve today. On March 18, 2024, when NVIDIA's GPU Technology Conference (GTC) for AI hardware and software developers returned to an in-person format for the first time in five years, NVIDIA probably did not expect the ecosystem of developers, researchers, and business strategists to show even greater enthusiasm than before. Industry analysts, myself included, waited about an hour to get into the SAP Center, and even though we had been invited to the keynote with reserved seats, we couldn't make our way to them: the area was simply too crowded, and our seats had already been taken.

But after watching the GTC 2024 keynote, I'm sure of one thing: This event, together with the extraordinary efforts of technology pioneers across enterprises, vendors, and academia worldwide, opens a new chapter in the AI era and marks the beginning of a significant leap in the AI revolution. With major announcements spanning AI hardware infrastructure, a next-generation AI-native platform, and AI software for representative application scenarios, NVIDIA is paving the way for an AI-native enterprise foundation.

AI Infrastructure: Building On Its Impressive Lead

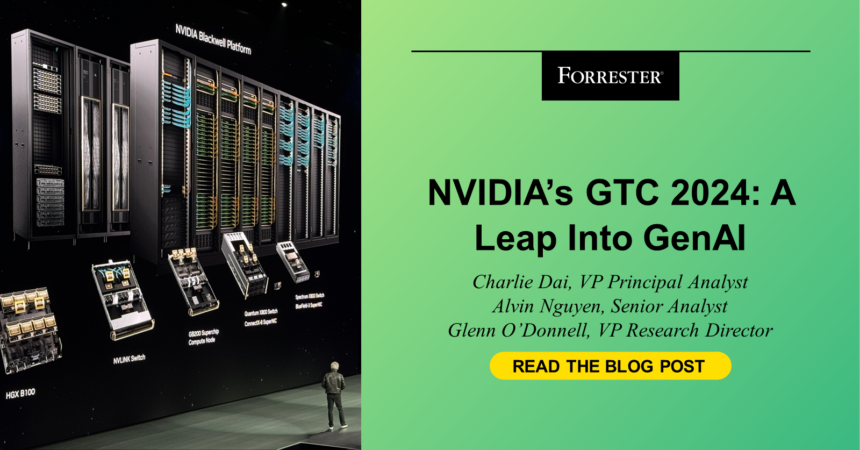

NVIDIA announced several breakthroughs in AI infrastructure. Representative new products include:

- Blackwell GPU series. The highly anticipated Blackwell GPU succeeds NVIDIA's already coveted H100 and H200 GPUs, and NVIDIA bills it as the world's most powerful chip for AI workloads. NVIDIA also announced the GB200 Superchip, which combines two Blackwell GPUs with one NVIDIA Grace CPU. According to NVIDIA, this setup offers up to a 30x performance increase over the H100 GPU for large language model (LLM) inference workloads, with up to 25x greater power efficiency.

- DGX GB200 System. Each DGX GB200 system features 36 NVIDIA GB200 Superchips (36 NVIDIA Grace CPUs and 72 NVIDIA Blackwell GPUs in total), connected via fifth-generation NVIDIA NVLink to operate as a single supercomputer. The GB200 Superchips deliver up to a 30x performance increase over the NVIDIA H100 Tensor Core GPU for LLM inference workloads.

- DGX SuperPOD. The Grace Blackwell-powered DGX SuperPOD comprises eight or more DGX GB200 systems and can scale to tens of thousands of GB200 Superchips connected via NVIDIA Quantum InfiniBand. For a massive shared memory space to power next-generation AI models, customers can deploy a configuration that links all 576 Blackwell GPUs across eight DGX GB200 systems via NVLink.

What it means: On one hand, these new products represent a significant leap forward in AI computing power and energy efficiency. Once deployed in production, they will not only substantially boost performance for both training and inferencing, enabling researchers and developers to tackle previously intractable problems, but also address customer concerns about power consumption. On the other hand, existing H100 and H200 customers will see the business advantage of their investments erode relative to the B-series. And tech vendors that planned to make money by reselling AI computing power will have to figure out how to secure their ROI.

Enterprise AI Software & Apps: The Next Frontier

NVIDIA has already built an integrated software kingdom around CUDA, and it is strategically building out its enterprise AI portfolio with a cloud-native approach that leverages Kubernetes, containers, and microservices in a distributed architecture. Given this year's achievements in AI software and applications, I'm calling it "genAI-native": natively built for genAI development scenarios across training and inferencing, and natively optimized for genAI hardware. Representative offerings include:

- NVIDIA NIM to accelerate AI model inferencing through prebuilt containers. NVIDIA NIM is a set of optimized cloud-native microservices designed to simplify the deployment of genAI models anywhere. It can be viewed as an integrated inferencing platform with six layers: prebuilt containers and Helm charts, industry-standard APIs, domain-specific code, optimized inference engines (e.g., Triton Inference Server™ and TensorRT™-LLM), support for custom models, and the NVIDIA AI Enterprise runtime that underpins them all. This abstraction provides a streamlined path for developing AI-powered enterprise applications and deploying AI models in production.

- Offerings to advance development in transportation and healthcare. For transportation, NVIDIA announced that BYD, Hyper, and XPENG have adopted the NVIDIA DRIVE Thor™ centralized car computer to power next-generation consumer and commercial fleets. For healthcare, NVIDIA announced more than two dozen new microservices for advanced imaging, natural language and speech recognition, and digital biology generation, prediction, and simulation.

- Offerings to facilitate innovation in humanoids, 6G, and quantum computing. For humanoids, NVIDIA announced Project GR00T, a general-purpose foundation model for humanoid robots, to further drive development in robotics and embodied AI. For telcos, it unveiled a 6G research cloud platform to advance AI for radio access networks (RAN), consisting of NVIDIA Aerial Omniverse Digital Twin for 6G, NVIDIA Aerial CUDA-Accelerated RAN, and NVIDIA Sionna Neural Radio Framework. And for quantum computing, it launched a quantum simulation platform that includes a generative quantum eigensolver, which uses an LLM to find a molecule's ground-state energy more quickly, and QC Ware Promethium for tackling complex quantum chemistry problems such as molecular simulation.

- Expanded partnerships with all major hyperscalers except Alibaba Cloud. Beyond offering compute instances with the latest chips on the major hyperscalers, NVIDIA is expanding partnerships across domains to accelerate digital transformation. For example, Amazon SageMaker will integrate with NVIDIA NIM to further optimize the price performance of foundation models running on GPUs, and AWS and NVIDIA will collaborate more on healthcare. NVIDIA NIM is also coming to Azure AI, Google Cloud, and Oracle Cloud for AI deployments, with further initiatives on healthcare, industrial design, and sovereignty with AWS, Google, and Oracle, respectively.

What it means: NVIDIA has become a competitive software provider in the enterprise space, especially in areas relevant to genAI. Its advantage in AI hardware infrastructure gives it great potential to shape application architectures and the competitive landscape. However, enterprise decision-makers should recognize that NVIDIA's core strength is still hardware; it lacks experience and business solutioning capabilities in complex enterprise software environments. In addition, the availability of its AI software (and the hardware underneath) varies by region, limiting its ability to serve local clients.

Looking Ahead

For years, Jensen and other NVIDIA executives have worked hard to convince clients that NVIDIA is no longer just a GPU company. I would say that mission is now accomplished. The GPU is no longer what we used to think it is, but two things remain constant: an obsession with customer needs and a focus on the IT that drives high business performance. Enterprise decision-makers should keep an eye on NVIDIA's product roadmap and take a pragmatic approach to turning bold vision into superior performance.

Of course, this is only a fraction of the announcements at NVIDIA GTC 2024. For more perspective from us (Charlie Dai, Alvin Nguyen, and Glenn O’Donnell) or any other Forrester analyst, book an inquiry or guidance session at inquiry@forrester.com.