Investors are set to assess whether enormous demand for artificial intelligence products can help offset a slump in global sales for computer hardware when Nvidia reports quarterly results on Wednesday.

The US group said in its previous earnings report that demand for its processors used to train large language models, such as the ones behind OpenAI’s ChatGPT, would drive up revenues by nearly two-thirds and help quadruple its earnings per share in the three months to the end of July.

The world’s most valuable chipmaker now plans to at least triple production of its top H100 AI processor, according to three people close to Nvidia, with 1.5mn to 2mn H100s set to ship in 2024, compared with the 500,000 expected this year.

With AI processors already sold out into 2024, the scramble for Nvidia’s chips is weighing on the broader market for computing equipment, as big buyers pour investment into AI at the expense of general-purpose servers.

Foxconn, the world’s largest contract electronics manufacturer by revenues, last week forecast very strong demand for AI servers for years to come, but warned that overall server revenues would fall this year.

Lenovo, the biggest computer maker by units shipped, last week reported an 8 per cent revenue drop for the second quarter, which it attributed to soft server demand from cloud service providers (CSPs) and shortages of AI processors (GPUs).

“[CSPs] are shifting their demand from the traditional computers to the AI servers. But unfortunately, the AI server supply is constrained by the GPU supply,” said Yang Yuanqing, Lenovo chief executive.

Taiwan Semiconductor Manufacturing Company, the world’s largest contract chipmaker by revenues and the exclusive producer of Nvidia’s cutting-edge AI processors, predicted last month that demand for AI server chips would grow by almost 50 per cent annually for the next five years. However, it said this would not be enough to offset downward pressure from a global tech slump caused by the economic downturn.

In the US, cloud service providers such as Microsoft, Amazon and Google, which account for the lion’s share of the global server market, are switching their focus to building up their AI infrastructure.

“The weak overall economic environment is challenging for the US CSPs,” said Angela Hsiang, vice-president at KGI, a Taipei-based brokerage. “Since in AI servers every component needs to be upgraded, the price is a lot higher. The CSPs are aggressively expanding in AI servers, but that was not on the cards when capital expenditure budgets were drafted, so that expansion is cannibalising other spending.”

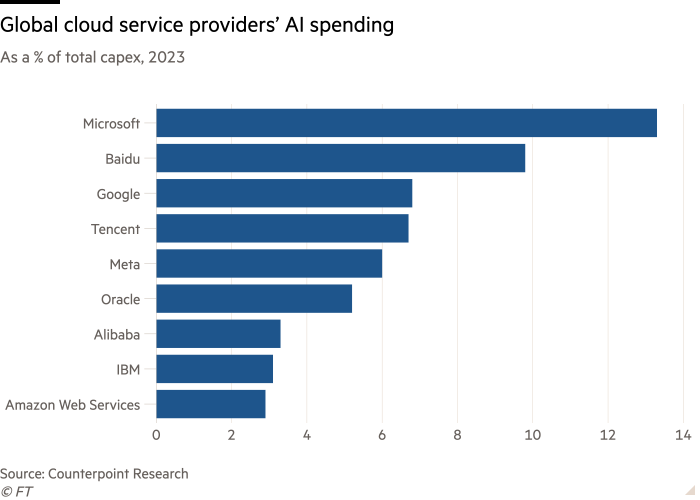

Counterpoint Research expects global CSP capital expenditure to grow by just 8 per cent this year, down from almost 25 per cent growth in 2022, as interest rates rise and businesses cut back.

Industry research firm TrendForce expects global server shipments to decrease by 6 per cent this year, followed by only modest growth of 2 to 3 per cent in 2024. It points to Meta Platforms’ decision to cut server purchases by more than 10 per cent to channel investment towards AI hardware, and to Microsoft’s delays in upgrading its general-purpose servers to free up funds for AI server expansion.

Besides the Nvidia chip shortages, analysts point to other bottlenecks in the supply chain that are delaying the AI harvest for the hardware sector.

“There is a capacity shortage both in advanced packaging and in high-bandwidth memory (HBM), both of which are limiting production output,” said Brady Wang, a Counterpoint analyst. The two main suppliers of HBM are South Korea’s SK Hynix and Samsung.

TSMC plans to double its capacity for CoWoS (chip-on-wafer-on-substrate), an advanced packaging technology needed to make Nvidia’s H100 processor, but has warned that the bottleneck would not be resolved until at least the end of 2024.

The Chinese market faces an additional hurdle. Although Chinese CSPs such as Baidu and Tencent are allocating as high a proportion of their investment to AI servers as Google and Meta, their spending is held back by Washington’s export controls on Nvidia’s H100. The alternative for Chinese companies is the H800, a less powerful version of the chip that carries a significantly lower price tag.

A sales manager from Inspur Electronic Information Industry, a leading Chinese server provider, said customers were demanding quick delivery, but manufacturers were experiencing delays. “In the second quarter, we delivered Rmb10bn ($1.4bn) of AI servers and took another Rmb30bn of orders . . . the most troublesome thing is Nvidia’s GPU chips — we never know how much we can get,” he said.

But once the global economy improves and the shortages abate, companies in the server supply chain could reap substantial benefits, corporate executives and analysts said.

The KGI brokerage predicts that shipments of servers for training AI algorithms will triple next year, while Dell’Oro, a California-based tech research firm, expects the share of AI servers in the overall server market to rise from 7 per cent last year to about 20 per cent in 2027.

Because AI servers cost markedly more, “these deployments could constitute over 50 per cent of the total expenditure by 2027”, Dell’Oro analyst Baron Fung said in a recent report.

“For the supply chain, it’s just multiples of everything,” KGI’s Hsiang said. With eight GPUs in one AI server, demand for baseboards, on which the GPU modules sit, is bound to soar compared with general-purpose servers, she said. AI servers also need larger racks to hold the processor modules.

The much higher power consumption of generative AI servers compared with general-purpose ones also calls for different cooling systems and new power supply specifications.

Foxconn could be among the main beneficiaries of the shift because the group offers everything from components to final assembly. Its affiliate, Foxconn Industrial Internet, is already the exclusive provider of Nvidia’s GPU module.

For Wiwynn, a server specialist affiliated with Foxconn competitor Wistron, AI orders already account for 50 per cent of revenues, more than double last year’s proportion, according to Goldman Sachs.

Analysts also see strong upside for component suppliers. Taiwanese printed circuit board (PCB) maker Gold Circuit Electronics could see AI servers rise from less than 3 per cent of its revenues this year to as much as 38 per cent, Goldman Sachs said in a report in June. The expectation is driven by the sevenfold increase in PCB content in AI servers compared with general-purpose servers.